News Courtesy of moz.com:

Posted by Tom-Anthony

During a discussion with Google’s John Mueller at SMX Munich in March, he told me an interesting bit of data about how Google evaluates site speed nowadays. It has gotten a bit of interest from people when I mentioned it at SearchLove San Diego the week after, so I followed up with John to clarify my understanding.

The short version is that Google is now using performance data aggregated from Chrome users who have opted in as a datapoint in the evaluation of site speed (and as a signal with regard to rankings). This is a positive move (IMHO) as it means we don’t need to treat optimizing site speed for Google as a separate task from optimizing for users.
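To make the idea of "aggregated" field data concrete, here is a minimal sketch of how a set of per-visit load times could be summarized and compared with a single crawler-style measurement. Everything in it is an assumption for illustration: the function name `summarize_load_times`, the sample numbers, and the choice of median and 75th percentile are hypothetical, and nothing here describes how Google actually aggregates Chrome data.

```python
from statistics import median, quantiles


def summarize_load_times(samples_ms):
    """Summarize per-visit page load times (in milliseconds).

    Hypothetical helper: the metrics chosen here (median and 75th
    percentile) are an illustration, not Google's known method.
    """
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples")
    # quantiles(n=4) returns the three quartile cut points;
    # index 2 is the 75th percentile.
    p75 = quantiles(samples_ms, n=4, method="inclusive")[2]
    return {"median_ms": median(samples_ms), "p75_ms": p75}


# Made-up load times from real visitors on varied devices and networks.
field_samples_ms = [900, 1200, 1500, 2100, 4800]

# Made-up single fetch time as a crawler on a fast connection might see it.
crawler_sample_ms = 400

summary = summarize_load_times(field_samples_ms)
print(summary)
print("crawler fetch (ms):", crawler_sample_ms)
```

The point of the comparison is only that a single fast fetch can look very different from what a spread of real visitors experiences, which is why field data is the more realistic input.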

Previously, it was not clear how Google evaluated site speed, and it was generally believed to be measured by Googlebot during its visits, a belief reinforced by the presence of speed charts in Search Console. However, the onset of JavaScript-enabled crawling made it less clear what Google was doing. They obviously want the most realistic data possible, but it's a hard problem to solve. Googlebot is not built to replicate how actual visitors experience a site, so as the task of crawling became more complex, it makes sense that Googlebot may not be the best mechanism for this (if it ever was the mechanism).

It should be no mystery that optimized websites rank better than ones that perform poorly. It may not be a huge factor, but every positive ranking signal does add up. After each website I build, I test it through Google’s PageSpeed Insights (as well as other benchmarking websites). I’ve always assumed that it was Googlebot measuring the speed of a website. However, Googlebot doesn’t accurately represent the experience of an actual visitor. There is something to be said for balancing user experience and loading speed. Now that actual user data is used to gauge performance, developers don’t have to worry about catering to both Google and visitors.

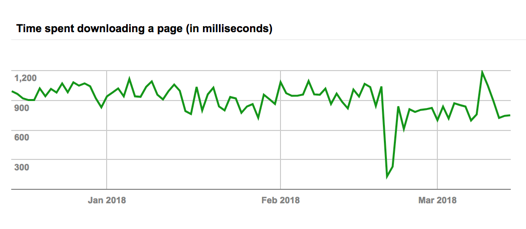

For people worried about privacy, the article does specify that data is gathered only from users who opt in to provide it. It also explains that what Googlebot fetches when it crawls a website may differ from what a browser loads when rendering a page. The download performance graph you see in Google’s Search Console, therefore, isn’t an accurate representation of a visitor’s experience.

The final point in the article urges web designers and developers to start focusing on users when it comes to optimization. Yes, as far as indexing and crawling go, you still want to make sure Googlebot doesn’t encounter any errors on your pages. However, with real-world metrics now in use, improvements such as HTTP/2 that speed up the experience for actual visitors can give your website a boost. Previously, this was not possible because of how Google crawls websites.